
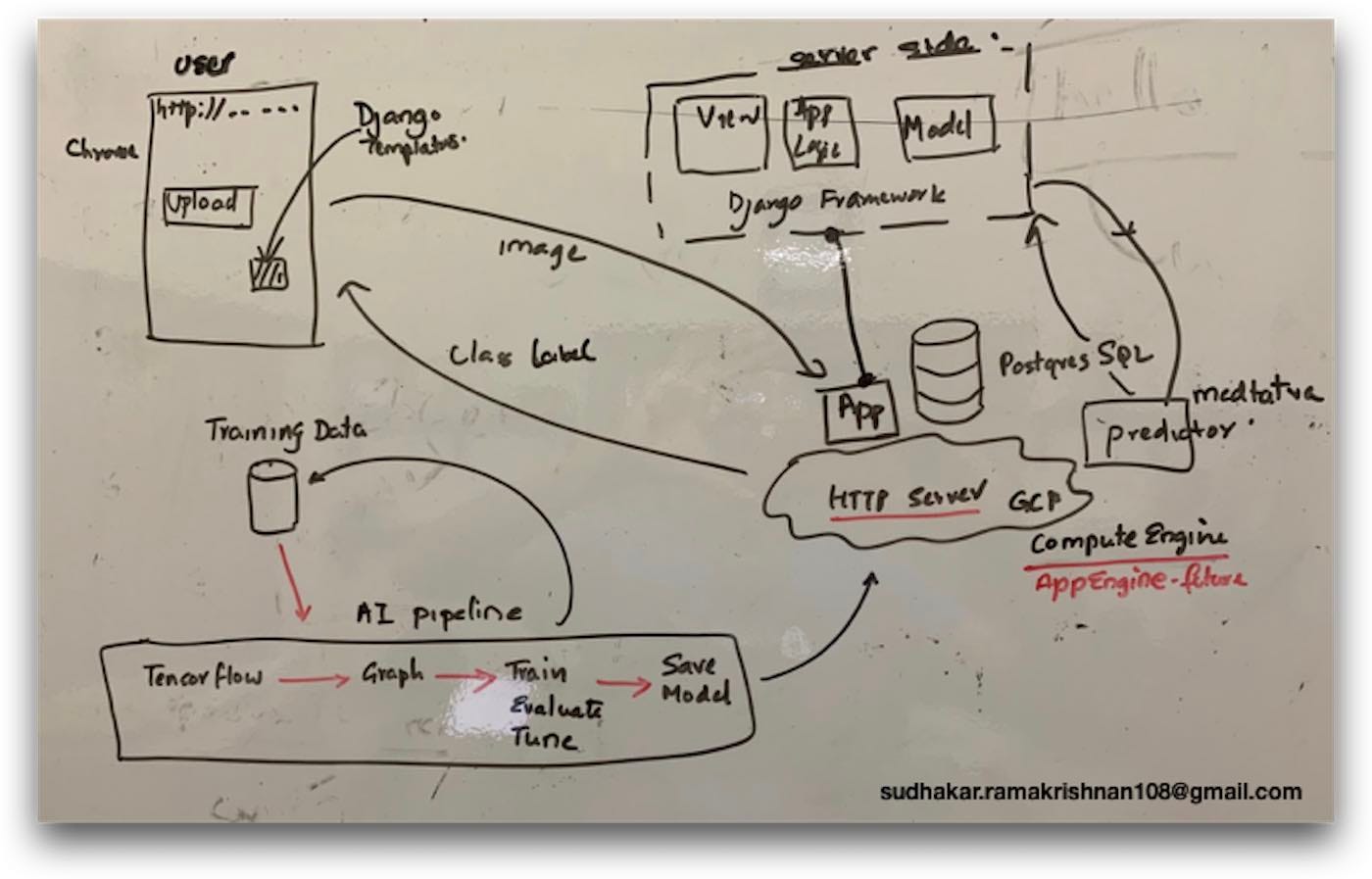

Deployment of an XRay App into the Cloud

10 troubleshooting tips to ease your cloud deployment, and why a custom runtime environment can give you 10x deployment speed.

Popular images provided by Bitnami seemed like one way to hit the market faster: a fully configured, certified Django development stack. I thought my deployment would be a breeze, but that excitement was short-lived. A plethora of issues, from SSL configs to conflicts with pre-installed runtimes, ate into my dev time and led to slower builds and expensive debugging.

In this article, I address issues I encountered while deploying a TensorFlow XRay processing app on App Engine standard and flex, and on a Certified Cloud Stack. Towards the end, I explain briefly why startups should seriously consider using a custom runtime in the App Engine flexible environment.

App Engine (AE) issues (1–5), Certified Software Stack issues (6–10)

- AE: dependencies on tensorflow==1.15.0

- AE: builder error: error [Errno 12] Cannot allocate memory

- Application startup error! Code: VM_DISK_FULL

- AE: APP_CONTAINER_CRASHED

- AE: gcloud app deploy complains “Too many open files”

- Python2 vs python3 woes: working with bitnami stack

- configuring permission on /media and /static

- Running with off the shelf django (preinstalled httpd.conf)

- SSL Termination woes

- Postgres Database issues

AppEngine Issues

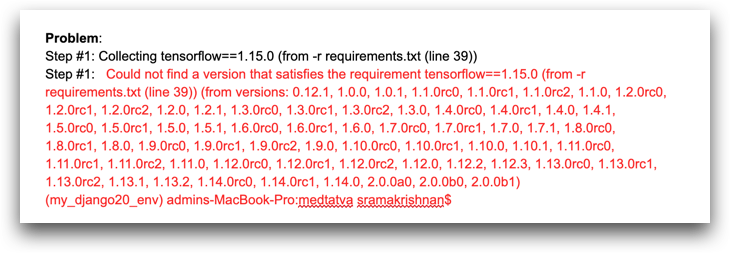

1. Error: Could not find a version that satisfies the requirement tensorflow==1.15.0 (from -r requirements.txt)

Tensorflow package refuses to install

Solution: In App Engine, pip comes preinstalled if you are using Python 2 >= 2.7.9, but that version is pip 10. The requirements.txt method can only install Python packages that the supplied pip is able to install, and tensorflow 1.15.0 can only be installed with pip 19.0 or later.
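To make the version mismatch concrete, here is a minimal sketch of a check you could run before installing the requirements. The 19.0 threshold is my understanding of what the TensorFlow 1.15 wheels need; the function names are hypothetical and not part of pip or App Engine.

```python
import re

# Assumption: TensorFlow 1.15 wheels need pip 19.0 or newer.
MIN_PIP = (19, 0)


def version_tuple(version):
    """Convert a version string such as '19.3.1' into a tuple of ints."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at the first non-numeric component
        parts.append(int(match.group()))
    while len(parts) < 2:
        parts.append(0)  # pad so that '19' compares like '19.0'
    return tuple(parts)


def pip_is_new_enough(pip_version, minimum=MIN_PIP):
    """Return True if pip_version is at least the required minimum."""
    return version_tuple(pip_version) >= minimum


if __name__ == "__main__":
    for candidate in ("10.0.1", "19.0", "19.3.1"):
        print(candidate, pip_is_new_enough(candidate))
```

In practice you would feed it the version reported by `pip --version` inside the runtime you are deploying to.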

To satisfy any other dependencies, including pip itself, you can build a custom runtime tailored exactly to your needs. This gave me a lot of flexibility.

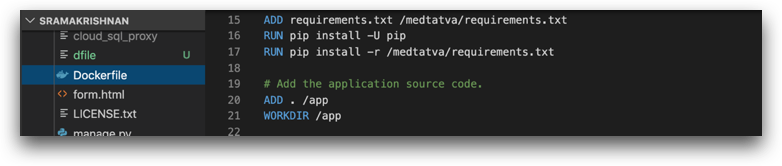

Update the Dockerfile as instructed in the link above.

Add a command to your Dockerfile to install pip:

Dockerfile: run pip to install the latest version of pip, then copy the application’s requirements.txt

2. “builder”: exited with error [Errno 12] Cannot allocate memory google cloud

Cause: OSError: [Errno 12] Cannot allocate memory points to a RAM problem, not a GPU problem. Check that you have enough RAM/swap and the correct user permissions.

Solution: A few changes I made in app.yaml solved the issue.

    runtime: custom
    env: flex
    runtime_config:
      python_version: 3
    readiness_check:
      app_start_timeout_sec: 1800
    resources:
      cpu: 2
      memory_gb: 4
      disk_size_gb: 30

This also fixed “Application startup error! Code: APP_CONTAINER_CRASHED”.

3. ERROR: (gcloud.app.deploy) Error Response: [9] Application startup error! Code: VM_DISK_FULL

Cause: Not enough free disk space is left for your App Engine Flexible application.

Solution: Increase your VM instance's disk size in the resources settings of the app.yaml file for your deployment and retry.

    resources:
      cpu: 2
      memory_gb: 4
      disk_size_gb: 30

4. Problem: APP_CONTAINER_CRASHED after gunicorn invocation | ModuleNotFoundError: No module named ‘wsgi’

Cause: Unable to find the application object.

Solution: The path to your application object within your wsgi file should point to a Python callable (function). In Django, it is named application, so <modulename>.wsgi:application would call the object. In other frameworks, you would need to refer to the wsgi.py file to find the application object.

    # Run a WSGI server to serve the application. gunicorn must be
    # declared as a dependency in requirements.txt.
    EXPOSE 8080
    CMD ["gunicorn", "--bind", ":8080", "medtatva.wsgi:application"]
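Before deploying, it can help to confirm that the `module:callable` string you pass to gunicorn actually resolves. The sketch below is a simplified approximation of that lookup using only the standard library. It is not gunicorn's own loader, and `resolve_wsgi_target` is a hypothetical helper name.

```python
import importlib


def resolve_wsgi_target(target, default_attr="application"):
    """Import 'package.module:attr' and return the callable it names.

    Assumption: when no ':attr' part is given, fall back to 'application'.
    """
    module_name, _, attr = target.partition(":")
    # Raises ModuleNotFoundError if the module path is wrong, which is the
    # same class of failure as "No module named 'wsgi'".
    module = importlib.import_module(module_name)
    app = getattr(module, attr or default_attr)
    if not callable(app):
        raise TypeError("{!r} does not name a callable".format(target))
    return app
```

Run from the same working directory and virtual environment as the server, a call such as `resolve_wsgi_target("medtatva.wsgi:application")` should fail in the same way the container would, but on your own machine.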

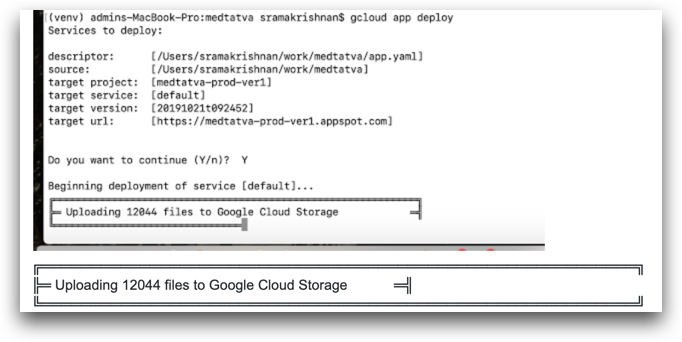

5. gcloud app deploy complains “Too many open files”

Cause: gcloud app deploy limits new versions to 10,000 files per app.

Solution: If your static file directory has too many files from the JavaScript libraries you use, don’t upload them directly with gcloud app deploy. Instead, run bower install (with the JavaScript libraries of your choice) in Docker. If your application relies on lots of files, consider using Google Cloud Storage.

In your Dockerfile:

    RUN npm install -g bower
    RUN bower install --save dojo/dojo dojo/dijit dojo/dojox
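To see how close a project is to the file limit before deploying, a small counter like the following can help. This is a rough sketch: it counts every file under a directory and does not honour any ignore rules gcloud may apply, so treat the number as an upper bound. The helper names are hypothetical.

```python
import os

FILE_LIMIT = 10000  # the per-version file limit quoted above


def count_files(root):
    """Count all files under root, recursively."""
    total = 0
    for _dirpath, _dirnames, filenames in os.walk(root):
        total += len(filenames)
    return total


def files_per_subdir(root):
    """Map each immediate subdirectory of root to its recursive file count."""
    counts = {}
    for entry in sorted(os.listdir(root)):
        path = os.path.join(root, entry)
        if os.path.isdir(path):
            counts[entry] = count_files(path)
    return counts


def exceeds_limit(root, limit=FILE_LIMIT):
    """Return True if root holds more files than the limit."""
    return count_files(root) > limit
```

The per-directory breakdown makes it easy to spot which JavaScript library is responsible for most of the files.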

Some components can be heavyweight in terms of # of files, a case to go with custom runtime

gcloud app deploy hitting the 10K daily quota limit

Bitnami Django Certified Stack Issues

6. Python2 vs python3 woes: working with bitnami stack

Problem: The Bitnami instance I used came pre-packaged with Python 2.x, and the packaged Django 2.2.6 ran on the same version. Our project had Python 3.x as its core dependency for Django, third-party Python libraries and TensorFlow.

Solution: Python 3.7 had to be compiled with lzma compression support and installed into /opt/Python3.x/. The binary then had to be added to the PATH variable so that calls to python3 get routed to it. Next, use the pip3 of the compiled Python 3.x to set up a virtual environment and install the dependencies in requirements.txt. Throughout the Django code, reference python3 using /usr/bin/env python3; otherwise calls get routed to the system Python 2.x, causing vague issues.
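One way to turn those vague issues into an explicit error is a guard at the top of an entry point such as manage.py or wsgi.py. This is a minimal sketch; the 3.7 minimum is an assumption based on the version compiled above, and `check_python` is a hypothetical name.

```python
import sys


def check_python(version_info=None, minimum=(3, 7)):
    """Raise RuntimeError if the interpreter is older than `minimum`."""
    if version_info is None:
        version_info = sys.version_info
    current = tuple(version_info[:2])
    if current < minimum:
        # str.format is used so the message also works on old interpreters.
        raise RuntimeError(
            "Python {}.{} or newer is required, but this is {}.{} ({})".format(
                minimum[0], minimum[1], current[0], current[1], sys.executable
            )
        )
    return current
```

Calling `check_python()` first thing makes a wrongly routed interpreter fail immediately, with the path of the offending binary in the message.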

7. Apache issues: configuring permissions on /media and /static directories

Problem: Apache permission issues with the ‘static’ and ‘media’ folders.

Solution: The media folder needed ‘daemon’ as the group and bitnami as the owner. Only then would it run.

8. Problem: Running with off the shelf django (preinstalled httpd.conf)

Solution: The specific conf that finally worked is kept in the ‘conf’ directory at the root of our master branch:

    <IfDefine !IS_DJANGOSTACK_LOADED>
    Define IS_DJANGOSTACK_LOADED
    #WSGIDaemonProcess wsgi-djangostack python-home=/opt/Python3.7.4/bin/python3.7 processes=2 threads=15 display-name=%{GROUP}
    WSGIDaemonProcess <yourproject> python-home=/opt/bitnami/apps/django/django-project/venv/bin/python3.7 processes=2 threads=15 display-name=%{GROUP}
    </IfDefine>

    <Directory "/opt/bitnami/apps/django/django_projects/<yourproject>/<appname>">
        Options +MultiViews
        AllowOverride All
        <IfVersion >= 2.3>
            Require all granted
        </IfVersion>
        WSGIProcessGroup wsgi-djangostack
        WSGIApplicationGroup %{GLOBAL}
    </Directory>

    #Adding the lines below to set the PythonHome to the compiled python at /opt/Python3.7.4
    #WSGIPythonHome /opt/Python/3.7.4/bin/python3.7

    <Directory "/opt/bitnami/apps/django/django_projects/yourproject/static">
        Options FollowSymLinks
        Require all granted
        AllowOverride None
    </Directory>

    <Directory "/opt/bitnami/apps/django/django_projects/<yourproject>/media">
        Options FollowSymLinks
        Require all granted
        AllowOverride None
    </Directory>

    #Alias /static "/opt/bitnami/python/lib/python3.7/site-packages/django/contrib/admin/static"
    Alias /static "/opt/bitnami/apps/django/django_projects/<yourproject>/static"
    Alias /media "/opt/bitnami/apps/django/django_projects/<yourproject>/media"
    WSGIScriptAlias /<yourproject> '/opt/bitnami/apps/django/django_projects/<yourproject>/<yourapp>/wsgi.py'
    #Include "/opt/bitnami/apps/django/django_projects/<yourproject>/conf/httpd-prefix.conf"

9. Getting SSL end point to work in certified cloud environment

Problem: “Warning: The domain ‘mydomain.com’ resolves to a different IP address than the one detected for this machine, which is ‘aa.bb.ccc.dddd’.”

I ran into a significant number of such issues while getting SSL termination to work with the bncert tool that ships with Bitnami by default. Though a certified stack is supposed to have adequate support, don’t count on it.

Solution: You can refer to my Stack Overflow query for the detailed steps I followed to resolve the issue. Here is a short checklist to help you:

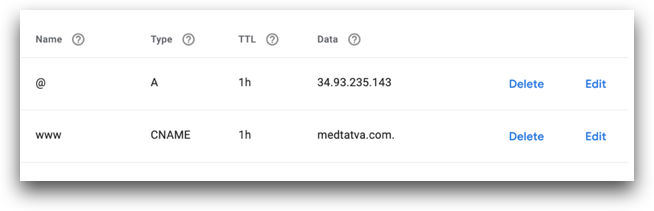

a) Run nslookup mydomain and look at the DNS server records. First and foremost, make sure you verify the CNAME and A records on your DNS page. You may need to change certain settings, such as the domain name settings. If you use Google Domains, the forwards don’t actually integrate the A and CNAME records to one static IP; I had to create the A and CNAME records manually.

Verify your DNS entry

b) Is mod_ssl installed?

    (my_django20_env) sramakrishnan$ sudo /opt/bitnami/apache2/bin/apachectl -M | grep ssl
    ssl_module (shared)

d) Explicitly force the HTTPS redirection in the web server settings.

Changes to /opt/bitnami/apache2/conf/bitnami/bitnami.conf:

    # Changed RewriteRule ^/(.*) https://example.com/$1 [R,L]
    RewriteRule ^/(.*) https://yourwebsite.com/$1 [R,L]

e) Fix the project-level settings: in your project-level conf directory, edit httpd-app.conf to ensure WSGIScriptAlias reflects your app’s wsgi.py (see Running with off the shelf django).

f) Ensure the right hosts are given access in ALLOWED_HOSTS, at the project root or your application root, depending on where you serve from.
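The DNS check in step a) can also be scripted. The sketch below uses the standard library resolver to test whether a domain resolves to the IP you expect, which is the condition the bncert warning complains about. The function names are hypothetical, and the `resolver` parameter exists only so the lookup can be swapped out in tests.

```python
import socket


def resolved_addresses(domain, resolver=socket.gethostbyname_ex):
    """Return the set of IPv4 addresses the domain currently resolves to."""
    _name, _aliases, addresses = resolver(domain)
    return set(addresses)


def domain_points_to(domain, expected_ip, resolver=socket.gethostbyname_ex):
    """Return True if expected_ip is among the domain's resolved addresses."""
    return expected_ip in resolved_addresses(domain, resolver)
```

Call it with your own domain and the static IP of the instance. If it returns False, fix the A and CNAME records before running the certificate tool again.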

References I found useful: an SSL read-up, and the SSL configuration generator at https://ssl-config.mozilla.org/. Note: Let’s Encrypt provides only DV certificates, not OV or EV. The easiest way to set up Let’s Encrypt on your server is with Certbot. I also found another thread related to the bncert tool useful.

10. Postgres Database issues

Problem: The PG DB is created when the Google Cloud instance is generated. The postgres user password is the same as the application password. Any attempt to set it via an SSH terminal using 'su passwd postgres' will fail.

Solution: The password of the PG database user postgres was the ‘application password’ given by Google at instance setup. The documentation was not clear, so we kept using ‘passwd postgres’ to set it and it kept failing. We also needed to edit the pg_hba.conf file to allow incoming TCP requests on port 5432 and to allow authorisation via password (md5 method). This is standard and is covered in the PostgreSQL documentation.
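After changing the server configuration, a quick reachability test tells you whether the database port accepts TCP connections at all, before you start debugging passwords. This is a minimal standard-library sketch. It only checks that something is listening on the port, not that md5 authentication succeeds, and `tcp_port_open` is a hypothetical helper name.

```python
import socket


def tcp_port_open(host, port=5432, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

If this returns False from the application host, the problem lies in the listener or firewall settings rather than in the postgres password.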

Takeaways

Remember the days of J2EE containers? Long ago, when I was at ESRI and had to make a geospatial EJB framework compatible across vendors, I had to wrangle my way through many competing J2EE containers and related issues, from class loaders to java.lang.ClassNotFoundException. Classpath issues always used to interfere with the order in which the class loader needed to load these classes.

Similarly, certified stacks, though useful, come with their own bag of runtime conflicts with your Django app's requirements.txt and the core runtimes you need. The question always boils down to how “clean and isolated” a container runtime you wish to start with.

To get a better feel for the three environments, I built a Django-based library app and deployed it to App Engine standard and flex. Later I took the same library application, packaged it as a Docker image, and pushed it to GKE on Google Compute Engine instances (with three polls pods in the cluster). I have published a detailed README at https://github.com/suramakr/django-playground/blob/master/README.md. As you can see from the README, the GKE deployment needed a bit more work than App Engine Flex with a custom runtime. I shall consider a move to GKE as my architecture evolves over time. All the project files I used are public in my django playground on GitHub.

Ultimately, I found my comfort zone in the App Engine Flexible environment with a custom runtime. It gave me the speed-up, the ease and flexibility, and the control I needed in the destination environment to hit the ground running. My build is now more deterministic (it runs in ~7 minutes), with SSL auto-managed, auto-scaling, centralised logs and more.

Acknowledgement

I thank Dr. T. R. Easwar for his inputs and collaboration while deploying the app. You can reach me at sudhakar.ramakrishnan108@gmail.com or www.svitaworld.com.
